During the last week of August, I blogged about how to deploy an on-premise database to a SQL Server instance running on an Azure virtual machine. I ran into a few issues while running the deployment wizard provided by Management Studio.

The First Stumble

This error is easy to avoid, and the requirement behind it is mentioned in the online documentation. The error message reads:

Failed to locate a SQL Server of version 12.0.2000 or later installed on the remote machine. Please verify that a SQL Server of the same or higher version than the source SQL Server is installed on the remote machine.

The above error is self-explanatory. The source database engine version must be lower than or equal to the version of the SQL Server instance running on the Azure virtual machine. For example, you cannot deploy a database from a SQL Server 2014 instance to a SQL Server 2012 instance running on an Azure VM.
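The version rule can be checked before launching the wizard. This is a minimal sketch, assuming you have already collected the version string of each instance (for example with `SELECT SERVERPROPERTY('ProductVersion')`). Both values below are placeholders, and the wizard's own comparison may differ in detail.

```powershell
# Placeholder version strings; substitute the values reported by each instance,
# for example from: SELECT SERVERPROPERTY('ProductVersion')
$sourceVersion = [version]'12.0.2000.8'   # on-premise instance (assumed value)
$targetVersion = [version]'11.0.5058.0'   # instance on the Azure VM (assumed value)

# System.Version values compare component by component (major, minor, build, revision).
if ($sourceVersion -le $targetVersion) {
    'Target is the same or a higher version than the source.'
} else {
    'Target is older than the source; expect the version error from the wizard.'
}
```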

The Second Stumble

The second common error that you might run into is:

The Cloud Adapter port configuration is not valid. Verify the virtual machine endpoint configurations.

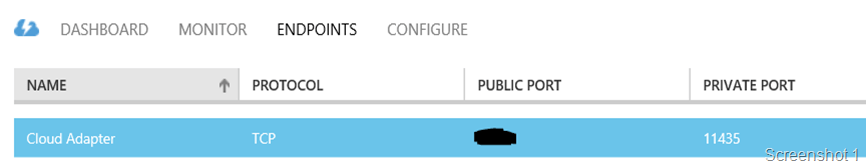

The above error is encountered when the Azure virtual machine has no endpoint configured to accept connections from outside. This is easily rectified by adding a TCP endpoint to your Azure virtual machine for port 11435, which is the port that the SQL Server Cloud Adapter service listens on. This is also mentioned in the online documentation. Once you have created the endpoint that lets your on-premise server connect to the Cloud Adapter service on the Azure virtual machine, your endpoint configuration should look like the one in the screenshot below:

The Third Stumble

The next issue could be a simple permissions or authentication problem, or it might not be as straightforward as it seems.

Cloud Adapter operation failed due to invalid authentication. Verify the virtual machine name, user name, and password.

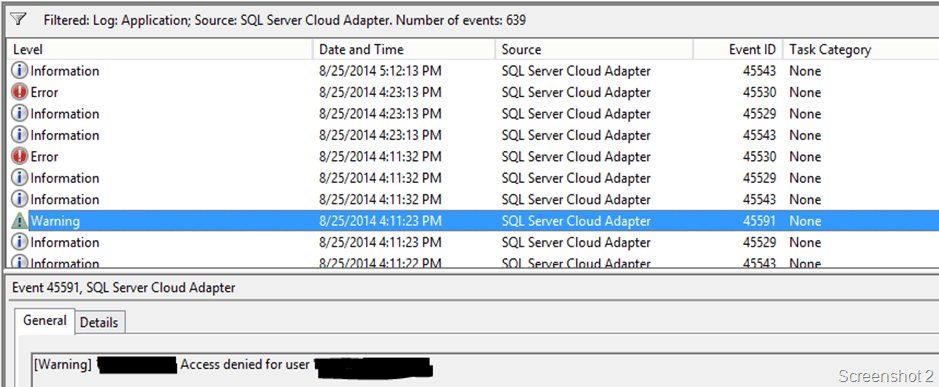

The first thing to check is whether you have the correct account name and password. If the failure is due to an authentication error, the application event log of the Azure virtual machine will show the following error, with the source as the SQL Server Cloud Adapter service, as shown in Screenshot 2. The text of the error message is:

Access denied for user <user name>
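Rather than browsing Event Viewer, the application log can be filtered from PowerShell on the Azure virtual machine. This is a rough sketch: the `*Cloud Adapter*` source pattern is my assumption based on the source name described above, so adjust it to match what your event log actually shows.

```powershell
# Read recent errors from the Application log and keep those whose source
# looks like the Cloud Adapter service (the pattern is an assumption).
Get-EventLog -LogName Application -EntryType Error -Newest 200 |
    Where-Object { $_.Source -like '*Cloud Adapter*' } |
    Select-Object TimeGenerated, Source, Message |
    Format-List
```

If nothing is returned, widening the `-Newest` window or dropping the source filter is a reasonable next step.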

The other error that you might encounter occurs when the SQL Server Cloud Adapter service tries to enumerate the database engine instances installed on the virtual machine. The Management Studio wizard still reports an authentication failure, but a little investigation into the application event log of the virtual machine will show the following error:

    [Error] <ip address> Exception in GetSqlInstances(): SQL Server WMI provider is not available on <machine name>.. Stack trace:
       at Microsoft.SqlServer.Management.Smo.Wmi.ManagedComputer.TryConnect()
       at Microsoft.SqlServer.Management.Smo.Wmi.WmiSmoObject.get_Proxy()
       at Microsoft.SqlServer.Management.Smo.Wmi.WmiSmoObject.EnumChildren(String childTypeName, WmiCollectionBase coll)
       at Microsoft.SqlServer.Management.Smo.Wmi.ServerInstanceCollection.InitializeChildCollection()
       at Microsoft.SqlServer.Management.CloudAdapter.Tasks.GetSqlInstances()
       at Microsoft.SqlServer.Management.CloudAdapter.CloudAdapter.GetSqlInstances(String username, String password). Inner Exception: Invalid namespace .

The above error clearly states that the GetSqlInstances() method failed. The Microsoft.SqlServer.Management.Smo.Wmi namespace contains classes that provide programmatic access to Windows Management Instrumentation (WMI) from an SMO application. I had talked about needing the shared management objects in an earlier post. The SQL Server 2014 WMI provider is also required. It is available by installing the client connectivity components from any SQL Server 2014 setup, including SQL Server Express. The components that I had installed were:

a. Client Tools Connectivity

b. Client Tools Backwards Compatibility

If you are not sure whether you have the WMI provider, look for the file "C:\Program Files (x86)\Microsoft SQL Server\120\Shared\sqlmgmproviderxpsp2up.mof". This is the SQL Server 2014 MOF file. Another way to test whether the WMI provider is working, without running the wizard every time and having it fail, is to run the PowerShell commands below on your Azure virtual machine. This script will tell you whether the instance enumeration performed by the deployment wizard will work or fail.

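    # Load the SMO and SQL WMI management assemblies (output suppressed).
    [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null
    [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.SqlWmiManagement") | Out-Null

    # Create a ManagedComputer object for the local machine.
    $m = New-Object ('Microsoft.SqlServer.Management.Smo.Wmi.ManagedComputer') '.'

    # Enumerate the instances; this fails if the SQL Server WMI provider is not available.
    foreach ($svi in $m.ServerInstances)
    {
        $svi.Name
    }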
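The MOF file check and the "Invalid namespace" inner exception can also be probed directly. This is a minimal sketch, assuming the default install path quoted earlier and assuming the SQL Server WMI namespaces are registered under `root\Microsoft\SqlServer`. For SQL Server 2014 I would expect a child namespace named `ComputerManagement12`, but treat that name as an assumption to verify on your own machine.

```powershell
# Path quoted in this post; adjust if the shared components are installed elsewhere.
$mof = 'C:\Program Files (x86)\Microsoft SQL Server\120\Shared\sqlmgmproviderxpsp2up.mof'

if (Test-Path -LiteralPath $mof) {
    "MOF file found: $mof"
} else {
    "MOF file not found: $mof"
}

# List the WMI namespaces registered under root\Microsoft\SqlServer.
# No ComputerManagement* entry in the output (or no output at all)
# suggests that the SQL Server WMI provider is not installed.
Get-WmiObject -Namespace 'root\Microsoft\SqlServer' -Class '__NAMESPACE' -ErrorAction SilentlyContinue |
    Select-Object -ExpandProperty Name
```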

This post was intended to document the common issues that you might run into while deploying a database from an on-premise SQL Server instance to a SQL Server instance running on an Azure virtual machine.