Management Data Warehouse (MDW) provides a mechanism to collect Perfmon and DMV outputs and store them in a SQL Server database, so that you can troubleshoot performance issues that occurred in the past. This is quite helpful for intermittent performance issues on your SQL Server instances, as it might not be possible to have someone monitoring the system 24x7 to troubleshoot the problem while it is occurring.

Management Data Warehouse provides two ways of collecting data:

1. Cached mode – This stores the Collection Set data in a .cache file in the cache file directory. The cached data is then uploaded to the MDW database based on the schedule that you defined for your Collection Set.

2. Non-cached mode – This method of collecting data using a Collection Set doesn’t depend on a local cache. It collects the data pertaining to the Collection Set and uploads it right away, based on one of two configuration options: On Demand or based on a schedule.
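To see which mode each collection set on an instance currently uses, a query along the following lines can help. This is a minimal sketch: it assumes the documented `msdb` catalog views `syscollector_collection_sets` and `sysschedules`, and that `collection_mode` is 0 for cached and 1 for non-cached, as described in Books Online.

```sql
USE msdb;

-- List every collection set with its mode and upload schedule.
-- Assumption: collection_mode 0 = cached, 1 = non-cached.
SELECT cs.name AS collection_set,
       CASE cs.collection_mode
            WHEN 0 THEN 'Cached'
            WHEN 1 THEN 'Non-cached'
       END AS collection_mode_desc,
       cs.is_system,
       cs.is_running,
       s.name AS schedule_name   -- NULL when the set has no schedule (on demand)
FROM dbo.syscollector_collection_sets AS cs
LEFT JOIN dbo.sysschedules AS s
       ON s.schedule_uid = cs.schedule_uid
ORDER BY cs.name;
```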

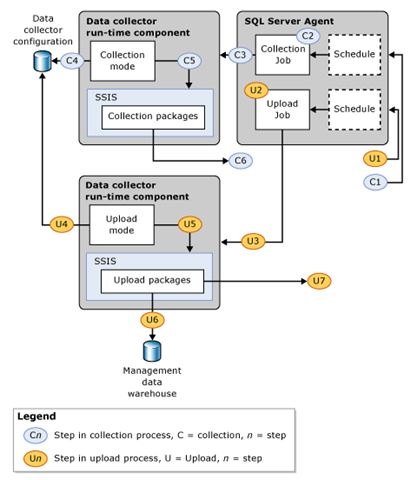

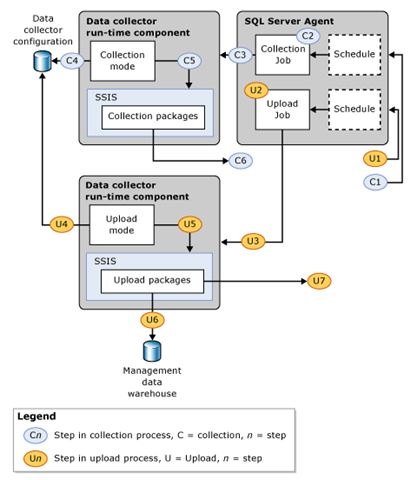

The screenshot from Books Online of the run-time processing of the MDW jobs shows how the data is uploaded to the MDW database. When you have a highly transactional system with a large number of concurrent connections to your SQL Server instance, or a lot of processes running on the Windows Server, the amount of data collected by the System Data Collection Sets can be quite large for a single iteration, especially for the Server Activity system data collection set.

So what can happen if the .cache files become very large? Let’s say your upload schedule is set to every 15 minutes. The collection set keeps adding collected data to the cache file, and every 15 minutes the scheduled upload reads this cache file and uploads the data using the SSIS upload package(s).

Now what happens if you miss an upload schedule because the upload job ran longer than 15 minutes? Your .cache file now has two sets of data to be uploaded. As more upload schedules are missed, the backlog keeps growing, and this becomes a problem once the upload job takes more than 60 minutes: the SSIS package(s) are hard-coded to time out after 60 minutes of execution. You are then stuck with cache data in your cache folder which cannot be uploaded.

When the SSIS package times out after 60 minutes of execution, you will get the following error in the job history for the job associated with the upload package:

Server Activity,Error,4861,Server Activity,,Error,The thread “ExecMasterPackage” has timed out 3600 seconds after being signaled to stop.,Upload,17/05/2011 13:11:37,,17/05/2011 14:11:38,3601,<server name>,,,
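If you want to spot this condition before the 60-minute limit is hit, the data collector execution log can be checked for slow or failed uploads. The query below is a sketch only. It assumes the `msdb.dbo.syscollector_execution_log` view, with `runtime_execution_mode = 1` meaning upload and `status` values of 0 (running), 1 (finished) and 2 (failed), as I understand them from Books Online.

```sql
USE msdb;

-- Show upload executions per collection set, longest first.
-- Assumptions: runtime_execution_mode 1 = upload;
--              status 0 = running, 1 = finished, 2 = failed.
SELECT cs.name AS collection_set,
       l.start_time,
       l.finish_time,
       DATEDIFF(second, l.start_time, ISNULL(l.finish_time, GETDATE())) AS duration_seconds,
       l.status,
       l.failure_message
FROM dbo.syscollector_execution_log AS l
JOIN dbo.syscollector_collection_sets AS cs
     ON cs.collection_set_id = l.collection_set_id
WHERE l.runtime_execution_mode = 1   -- upload executions only
  AND l.parent_log_id IS NULL        -- top-level log entries only
ORDER BY duration_seconds DESC;
```

Upload durations that keep creeping towards 3600 seconds are a sign that the cache backlog is growing.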

So what are your options here?

Option 1

1. Change the Server Activity collection to non-cached – This change would ensure that the data is uploaded as soon as it is collected without using an interim cache file.

2. Increase the collection frequency value – The collection frequency is set to a default of 15 seconds. This can be increased to 30 seconds or higher, so that data is collected less often and less data accumulates between the scheduled upload intervals.

3. Modify the sp_purge_data stored procedure for the purge job to run faster – An updated version of sp_purge_data is available here. This will ensure that the purge job completes faster.
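The first two changes above can be made from the collection set properties in Management Studio, or scripted. Below is a rough sketch of the scripted route using the documented `sp_syscollector_*` procedures in `msdb`. The 30-second value is just the example from the list, and I am assuming that the instance allows these changes on the system collection set, so test this on a non-production instance first.

```sql
USE msdb;

DECLARE @set_id int;

SELECT @set_id = collection_set_id
FROM dbo.syscollector_collection_sets
WHERE name = N'Server Activity';

-- The collection set has to be stopped before it can be modified.
IF EXISTS (SELECT 1 FROM dbo.syscollector_collection_sets
           WHERE collection_set_id = @set_id AND is_running = 1)
    EXEC dbo.sp_syscollector_stop_collection_set @collection_set_id = @set_id;

-- Change 1: switch to non-cached mode (1 = non-cached).
EXEC dbo.sp_syscollector_update_collection_set
     @collection_set_id = @set_id,
     @collection_mode = 1;

-- Change 2: raise the frequency value (in seconds) of every collection item.
-- As far as I know this value only matters while the set runs in cached mode.
DECLARE @item_id int;
DECLARE item_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT collection_item_id
    FROM dbo.syscollector_collection_items
    WHERE collection_set_id = @set_id;

OPEN item_cursor;
FETCH NEXT FROM item_cursor INTO @item_id;
WHILE @@FETCH_STATUS = 0
BEGIN
    EXEC dbo.sp_syscollector_update_collection_item
         @collection_item_id = @item_id,
         @frequency = 30;
    FETCH NEXT FROM item_cursor INTO @item_id;
END
CLOSE item_cursor;
DEALLOCATE item_cursor;

-- Restart the collection set with the new settings.
EXEC dbo.sp_syscollector_start_collection_set @collection_set_id = @set_id;
```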

Option 2

Evaluate the perfmon counters being collected in the Server Activity collection set. Find out which perfmon counters are required and then create a custom collection set to capture this data with a non-cached mode for data collection.

A custom perfmon collection set is worthwhile here because the default Server Activity collection set collects and uploads data from a large number of perfmon counters, many of which you may not need.

Below is a custom collection set created for Performance Monitor counters. I have shown how to collect server-specific perfmon counters and SQL instance-specific perfmon counters.
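To evaluate which counters the Server Activity collection set currently collects, the collection item definitions can be read from `msdb`. This is a sketch which assumes that the perfmon collection item stores its counter list in the `parameters` XML column, in the same `PerformanceCountersCollector` format that the custom collection set script in this post uses.

```sql
USE msdb;

-- Shred the parameters XML of the Server Activity perfmon item
-- into one row per object/counter/instance definition.
WITH XMLNAMESPACES ('DataCollectorType' AS ns)
SELECT ci.name AS collection_item,
       pc.value('@Objects',   'nvarchar(256)') AS perfmon_object,
       pc.value('@Counters',  'nvarchar(256)') AS perfmon_counter,
       pc.value('@Instances', 'nvarchar(256)') AS perfmon_instance
FROM dbo.syscollector_collection_items AS ci
JOIN dbo.syscollector_collection_sets AS cs
     ON cs.collection_set_id = ci.collection_set_id
JOIN dbo.syscollector_collector_types AS ct
     ON ct.collector_type_uid = ci.collector_type_uid
CROSS APPLY ci.parameters.nodes('/ns:PerformanceCountersCollector/PerformanceCounters') AS t(pc)
WHERE cs.name = N'Server Activity'
  AND ct.name = N'Performance Counters Collector Type'
ORDER BY perfmon_object, perfmon_counter;
```

The output gives you a checklist of counters to keep or drop when designing the custom collection set.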

```sql
use msdb;

Begin Transaction
Begin Try

    -- Create the collection set. @collection_mode=1 means non-cached mode.
    Declare @collection_set_id_1 int
    Declare @collection_set_uid_2 uniqueidentifier

    EXEC [dbo].[sp_syscollector_create_collection_set]
        @name=N'Perfmon Counter Collection Set',
        @collection_mode=1,
        @description=N'Collects Perfmon Counters ',
        @target=N'',
        @logging_level=0,
        @days_until_expiration=7,
        @proxy_name=N'',
        @schedule_name=N'CollectorSchedule_Every_5min',
        @collection_set_id=@collection_set_id_1 OUTPUT,
        @collection_set_uid=@collection_set_uid_2 OUTPUT

    Select @collection_set_id_1, @collection_set_uid_2

    -- Look up the Performance Counters collector type
    Declare @collector_type_uid_3 uniqueidentifier

    Select @collector_type_uid_3 = collector_type_uid
    From [dbo].[syscollector_collector_types]
    Where name = N'Performance Counters Collector Type';

    -- Add one collection item holding the server-level counters (LogicalDisk, Process)
    -- and the SQL instance-level counter ($(INSTANCE):Buffer Manager)
    Declare @collection_item_id_4 int

    EXEC [dbo].[sp_syscollector_create_collection_item]
        @name=N'Logical Disk Collection and SQL Server CPU',
        @parameters=N'<ns:PerformanceCountersCollector xmlns:ns="DataCollectorType">
<PerformanceCounters Objects="LogicalDisk" Counters="Avg. Disk Bytes/Read" Instances="*" />
<PerformanceCounters Objects="LogicalDisk" Counters="Avg. Disk Bytes/Write" Instances="*" />
<PerformanceCounters Objects="LogicalDisk" Counters="Avg. Disk sec/Read" Instances="*" />
<PerformanceCounters Objects="LogicalDisk" Counters="Avg. Disk sec/Write" Instances="*" />
<PerformanceCounters Objects="LogicalDisk" Counters="Disk Read Bytes/sec" Instances="*" />
<PerformanceCounters Objects="LogicalDisk" Counters="Disk Write Bytes/sec" Instances="*" />
<PerformanceCounters Objects="Process" Counters="% Privileged Time" Instances="*" />
<PerformanceCounters Objects="Process" Counters="% Processor Time" Instances="*" />
<PerformanceCounters Objects="Process" Counters="% User Time" Instances="*" />
<PerformanceCounters Objects="$(INSTANCE):Buffer Manager" Counters="Page life expectancy" Instances="*" />
</ns:PerformanceCountersCollector>',
        @collection_item_id=@collection_item_id_4 OUTPUT,
        @frequency=5,
        @collection_set_id=@collection_set_id_1,
        @collector_type_uid=@collector_type_uid_3

    select @collection_item_id_4

    Commit Transaction;

End Try
Begin Catch

    -- Undo the partial creation and re-raise the error with its details
    Rollback Transaction;

    DECLARE @ErrorMessage NVARCHAR(4000);
    DECLARE @ErrorSeverity INT;
    DECLARE @ErrorState INT;
    DECLARE @ErrorNumber INT;
    DECLARE @ErrorLine INT;
    DECLARE @ErrorProcedure NVARCHAR(200);

    SELECT @ErrorLine = ERROR_LINE(),
           @ErrorSeverity = ERROR_SEVERITY(),
           @ErrorState = ERROR_STATE(),
           @ErrorNumber = ERROR_NUMBER(),
           @ErrorMessage = ERROR_MESSAGE(),
           @ErrorProcedure = ISNULL(ERROR_PROCEDURE(), '-');

    RAISERROR (14684, @ErrorSeverity, 1 , @ErrorNumber, @ErrorSeverity, @ErrorState, @ErrorProcedure, @ErrorLine, @ErrorMessage);

End Catch;
```
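Creating the collection set does not start it. Assuming the data collector is enabled and the MDW database has already been configured on the instance, something like the following should start the new collection set and confirm that it is running. The name is the one used in the script above.

```sql
USE msdb;

-- Start the custom collection set by name.
EXEC dbo.sp_syscollector_start_collection_set
     @name = N'Perfmon Counter Collection Set';

-- Confirm the state: is_running should be 1, collection_mode 1 (non-cached).
SELECT name, is_running, collection_mode, days_until_expiration
FROM dbo.syscollector_collection_sets
WHERE name = N'Perfmon Counter Collection Set';
```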
